Last Updated on December 18, 2024 by Editor

Did you know that GPT-4 from OpenAI reportedly has about 1.8 trillion parameters? OpenAI has not confirmed the figure, but if it is accurate, that is roughly 10 times the 175 billion parameters of GPT-3. This increase in size and complexity is transforming the field of Natural Language Processing (NLP), letting machines understand and produce language with remarkable fluency.

Large Language Models (LLMs) like GPT-3/GPT-4, Claude 3/Claude 3.5, and Gemini 1.5 play a key role in many areas. They help with content creation, business, healthcare, education, and various sectors of science and technology. Trained on huge amounts of text, these models can perform complex tasks with a level of accuracy and detail that once seemed out of reach.

In this article, you’ll learn about LLMs and their role in NLP’s future. You’ll see how they work and their impact on different fields. You’ll also find out about the different types of LLMs and their uses.

The AI market is projected to reach $190 billion by 2025, driven largely by advancements in LLMs.

Key Takeaways

- Large Language Models (LLMs) are changing NLP by making machines talk and write like us.

- Models like GPT-4, Claude 3, and Gemini 1.5 generate text that is contextually relevant, making them highly valuable across various industries.

- LLMs are used in many fields, from customer service and healthcare to education and creative work.

- The size and complexity of LLMs, like GPT-4 with a reported 1.8 trillion parameters, pose significant challenges for resources and computing power.

- The training process for LLMs is resource-intensive, requiring significant computational power and large datasets, which raises concerns about accessibility and environmental impact.

- LLMs are revolutionizing digital communication by enabling more natural interactions between humans and machines, enhancing user experiences across various platforms.

- As LLMs become more integrated into everyday applications, understanding their limitations and potential biases is crucial for responsible use in sensitive areas like healthcare and education.

- It’s important to think about ethics, such as bias and fairness, and data privacy when using LLMs.

- Emerging models like Claude and Gemini are pushing the boundaries of what LLMs can do, emphasizing safety, multimodal capabilities, and ethical considerations in AI development.

- Future developments in LLMs will likely focus on improving ethical standards, ensuring that AI systems are transparent, fair, and accountable in their operations.

![Large Language Models (LLMs) Explained: The Journey from Eliza to GPT-4 and the Future of Natural Language Processing (NLP) [Key Takeaways Infograph]](https://uniaiversity.com/wp-content/uploads/2024/11/llms-ai-s-new-voice_uniAIversity.png)

What Are Large Language Models: Core Concepts and Foundations

Large language models (LLMs) are advanced neural networks that understand and create human language. They are trained on vast datasets, including books and websites. This helps them understand grammar, context, and cultural references.

Definition and Basic Principles

LLMs use deep learning and transformer models to work with text. They are great at many tasks, such as chatbots and language translation.

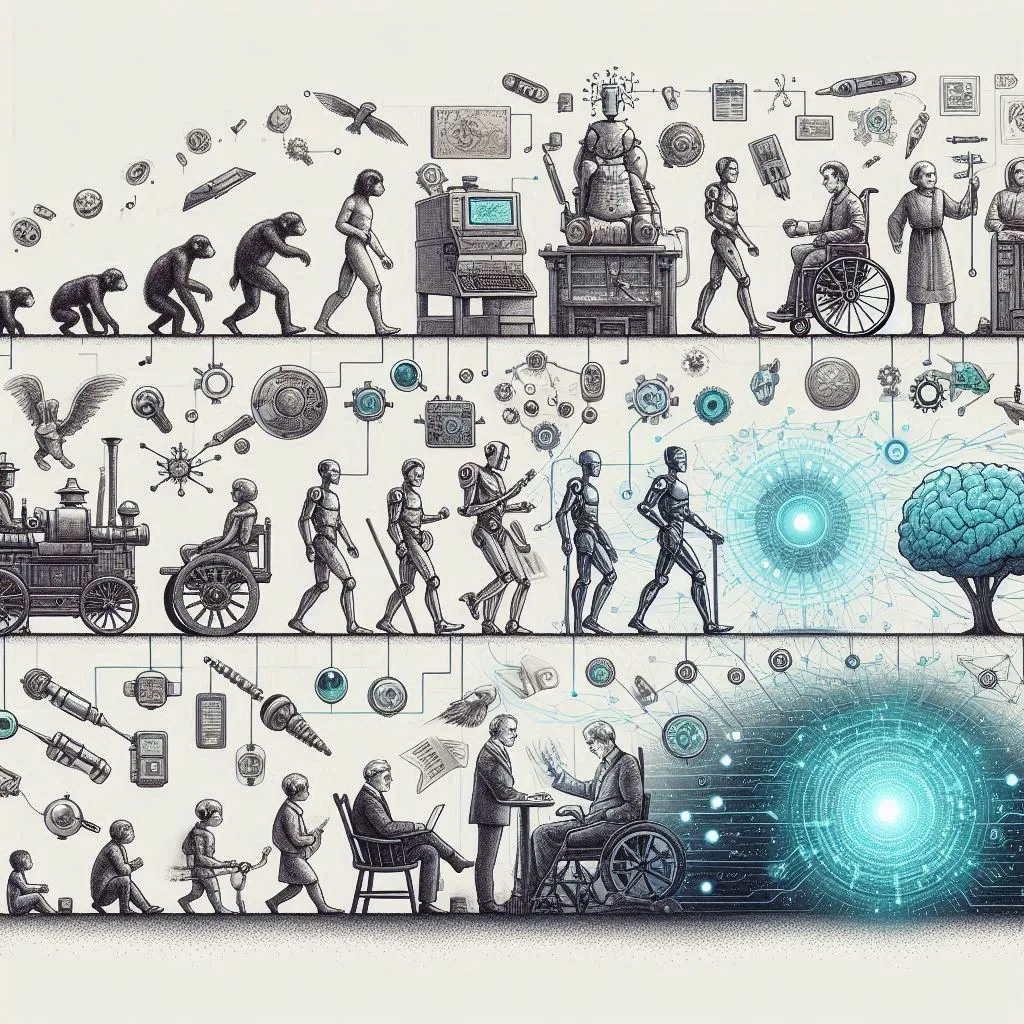

Evolution of Language Models

The evolution of language models has been remarkable. Early models eventually evolved into today’s advanced deep learning systems. One of the first attempts at natural language processing was Eliza, a program developed at MIT in 1966 by Joseph Weizenbaum. Eliza used simple pattern matching and substitution to mimic conversation, giving users the illusion that it understood them.
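
To make "pattern matching and substitution" concrete, here is a minimal sketch in the spirit of Eliza. The rules, the reflection table, and the function names are hypothetical and chosen for illustration; they are not Weizenbaum's original script.

```python
import re

# Hypothetical rules for illustration; not the original Eliza script.
# Each rule pairs a pattern with a response template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones in the captured fragment.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}


def reflect(fragment: str) -> str:
    """Rewrite a fragment from the user's point of view to the program's."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance: str) -> str:
    """Return the response for the first matching rule, or a stock reply."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I need a break from my job."))
```

The program has no model of meaning: it copies part of the user's sentence into a canned template, which is why the sense of being understood was an illusion.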

The real transformation began in 2017 with the introduction of the transformer architecture by Vaswani and others in their paper ‘Attention Is All You Need.’ This new model relied entirely on attention mechanisms, removing the need for older techniques like recurrence and convolutions. Soon after, Google introduced BERT (Bidirectional Encoder Representations from Transformers) in 2018, a model that reads text in both directions, making it much better at understanding context.
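
One way to picture "reads text in both directions" is through the attention mask, which controls which positions each token may look at. The sketch below is a simplification under stated assumptions: the function name `attention_mask` is hypothetical, and it leaves out the masked-word prediction objective that BERT also relies on during training.

```python
from typing import List


def attention_mask(seq_len: int, bidirectional: bool) -> List[List[int]]:
    """Build a seq_len x seq_len mask; 1 means token i may attend to token j."""
    return [
        [1 if bidirectional or j <= i else 0 for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Left-to-right (GPT-style): each token sees itself and earlier tokens only.
for row in attention_mask(3, bidirectional=False):
    print(row)

# Bidirectional (BERT-style): every token sees the whole sentence.
for row in attention_mask(3, bidirectional=True):
    print(row)
```

With the bidirectional mask, the representation of a word can draw on the words that follow it as well as the words before it.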

In 2019, GPT-2 by OpenAI marked another big step forward with its 1.5 billion parameters, showing impressive performance in generating coherent text. A more recent advancement is GPT-4, which reportedly has about 1.8 trillion parameters and is believed to use a “Mixture of Experts” (MoE) design; OpenAI has not confirmed either detail. In an MoE model, a routing layer activates only a few specialized sub-networks (“experts”) for each input, so most parameters stay idle on any given step. This makes very large models cheaper to run and easier to scale.
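
The routing idea behind Mixture of Experts can be sketched in a few lines. This is a toy illustration, not GPT-4's architecture, which has not been published. The experts are hypothetical scalar functions, and the gate scores are hard-coded where a real model would compute them with a learned layer.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_layer(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical toy experts; real experts are feed-forward sub-networks.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # assumed values, normally learned

selected = route_top_k(gate_scores, k=2)
output = moe_layer(3.0, experts, gate_scores, k=2)
print(sorted(selected), output)
```

Only two of the four experts run for this input, which is the source of the efficiency gain: total parameter count can grow without a matching growth in computation per input.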

Key Components of LLMs

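The success of LLMs comes from a few core components: attention mechanisms, which let the model weigh the relevance of each word to the others; self-supervised learning, which lets it learn from raw text without hand-written labels; and transfer learning, which lets knowledge gained in pre-training carry over to new tasks. Together, these features make LLMs central to AI and NLP.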

Large language models are trained using massive datasets, enabling them to perform a wide range of NLP tasks with impressive accuracy and versatility.

As natural language processing continues to evolve, LLMs will become increasingly significant. They will shape the future of digital communication and AI.

The ability of LLMs to process and generate human-like text at scale is revolutionizing how we approach natural language processing and communication.

How LLMs Work: Training Process and Architecture

Large Language Models (LLMs) use deep learning to understand and create human-like language. They learn from huge amounts of text data, picking up on word and sentence patterns. The transformer architecture, with its self-attention mechanisms, is key to many LLMs.
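
The idea of "picking up on word and sentence patterns" can be illustrated with a much simpler stand-in: a model that counts which word follows which. Real LLMs use neural networks rather than counts, so treat this as a sketch of the next-word prediction idea only; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical three-sentence training corpus.
corpus = [
    "the model reads text",
    "the model predicts text",
    "the model reads tokens",
]
counts = train_bigram(corpus)
print(predict_next(counts, "model"))
```

An LLM replaces the count table with billions of learned parameters and looks at far more than one previous word, but the training signal is the same: predict what comes next.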

Training LLMs is very demanding and requires large amounts of computing power, typically clusters of GPUs or TPUs. These models have billions or even trillions of parameters, which makes them expensive to train and to run. The process involves breaking large amounts of text into tokens, asking the model to predict the next token, and adjusting its parameters whenever the prediction is wrong.
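
A quick back-of-the-envelope calculation shows why parameter counts translate into hardware demands. The sketch assumes 2 bytes per parameter (16-bit weights) and counts the weights only; training needs considerably more memory for gradients, optimizer state, and activations. The function name is hypothetical.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


gpt2_gb = weight_memory_gb(1.5e9)            # GPT-2: 1.5 billion parameters
reported_gpt4_gb = weight_memory_gb(1.8e12)  # reported, unconfirmed figure

print(f"GPT-2 weights: {gpt2_gb:.0f} GB")
print(f"Reported GPT-4 scale: {reported_gpt4_gb:.0f} GB")
```

Under these assumptions, a model at the reported GPT-4 scale would need thousands of gigabytes for its weights alone, far more than any single accelerator holds, which is why such models are spread across many devices.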

The self-attention mechanism in Transformers lets LLMs weigh how relevant every other word in a sentence is to the word being processed. This improves their grasp of context and the coherence of the text they produce. Multi-head attention runs several attention operations in parallel, so the model can track different kinds of relationships in a sentence at once.
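
The core computation is scaled dot-product attention from "Attention Is All You Need": softmax(QK^T / sqrt(d_k)) V. Below is a minimal pure-Python sketch. As a simplifying assumption, the same hypothetical toy vectors serve as queries, keys, and values; a real transformer first passes the input through three learned projections.

```python
import math


def softmax(row):
    """Turn raw scores into weights that sum to 1."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[dim] for w, v in zip(weights, values))
            for dim in range(len(values[0]))
        ])
    return outputs, all_weights


# Hypothetical 2-dimensional vectors standing in for a three-token sentence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every token in the sentence. Multi-head attention repeats this computation several times with different projections and concatenates the results.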

Pre-trained LLMs can be fine-tuned for many NLP tasks, like text creation, translation, and summarization. This makes them useful in marketing, customer service, science, and education. Their ability to adapt and perform well in various areas is what makes them so valuable.

The environmental cost of training large models is significant; by one 2019 estimate, training a single large model can emit as much carbon as five cars over their lifetimes.

Understanding Large Language Models (LLMs): The Future of NLP

Large language models (LLMs) are getting better fast. Models like GPT-4 are doing more tasks well. They have billions of parameters and learn from lots of text. This lets them understand language like humans do.

LLMs are changing how we talk to machines. They make chatbots, virtual assistants, and customer support better.

Emerging Trends in Natural Language Processing

LLMs are set to get even better. Soon, we’ll have LLMs made just for certain areas like healthcare and law. They’ll give more accurate answers in these fields.

We’ll also see LLMs that can handle different types of media. This means they can work with text, images, audio, and video.

Impact of LLMs on Digital Communication

LLMs are improving digital communication. They help chatbots and virtual assistants respond more like people, and they make information easier to access by translating and summarizing content in real time.

But as LLMs become more widely used, we need to think about fairness and accuracy.

| Key Trends in Large Language Models | Impact |
| --- | --- |
| Increased Scale and Specialization | LLMs with trillions of parameters and tailored for specific domains will deliver more precise and relevant outcomes |
| Multimodal Capabilities | LLMs will be able to process and generate content across text, graphics, audio, and video, enhancing user experiences |
| Workplace Transformation | LLMs may replace certain professions, while also boosting productivity and efficiency |
| Ethical Considerations | Addressing bias, accuracy, and consent will be crucial as LLMs see wider adoption |

As LLMs get better, they’ll change how we talk to machines and work in many fields. They’ll help humans and machines work together better than ever.

Types of Large Language Models and Their Capabilities

The world of natural language processing has changed significantly with the arrival of Large Language Models (LLMs). Each model has its own features and uses. The field has evolved from early transformer models such as the first GPT, BERT, XLNet, and T5 to recent models like GPT-4, and each generation has changed how we understand and create language.

OpenAI’s GPT Series

OpenAI has led the way in this evolution with models like GPT-2, GPT-3, and, more recently, GPT-4. These models have made huge strides in generating content and understanding language. GPT-4’s architecture is thought to use a “Mixture of Experts” (MoE) system, in which a routing layer sends each input to a small number of specialized sub-networks. If the reports are accurate, not all of the estimated 1.8 trillion parameters are used at once; only the selected “experts” are activated for a given input, which makes the model more scalable and efficient on complex NLP tasks. GPT-3, in particular, changed how conversational AI and customer service operate, enabling more sophisticated and natural responses.

The latest model, GPT-4, has established a new standard. It has improved multilingual skills, few-shot learning, and enhanced performance overall. The future of LLMs looks very promising for changing industries and redefining how we interact with technology.

Google and Meta’s Contributions

Google and Meta have also contributed significantly with models like BERT, T5, M2M-100, and LLaMA. These models have improved the handling of different languages and tasks in LLMs. BERT and T5 are widely known for their flexibility and robustness in many NLP tasks. Meta’s LLaMA models, especially LLaMA 2, offer efficient performance at smaller scales, making them competitive with larger models. LLaMA’s design emphasizes scalable solutions without compromising on output quality.

Facebook AI Research’s XLM-R is another powerful model that showcases the potential of transformer-based models across multiple languages. Beyond Google and Meta, Microsoft’s MPNet combines masked and permuted language modeling, building on BERT’s work and improving performance on diverse language structures.

Emerging LLMs in the AI Landscape

In addition to the well-known models like GPT, BERT, and T5, several new LLMs are gaining attention in the field. Claude, developed by Anthropic, emphasizes safety and alignment with human values. Its training approach focuses on ethical practices, addressing essential discussions around data privacy and responsible development. LLaMA by Meta provides efficient performance at smaller scales, making it an accessible option that competes effectively with larger models by focusing on scalability without sacrificing quality.

Gemini from Google DeepMind is a multimodal model that works with text, images, audio, and video, reflecting how much multimodal capabilities are now valued in NLP. Mistral is known for its open-weight and computationally efficient design, appealing to users interested in accessible LLM options that support decentralized AI solutions. BLOOM is a prominent open-source, multilingual model that is valuable for global NLP applications because it extends LLMs across languages and regions.

New models like Claude focus on safety and ethical AI practices while LLaMA offers efficient performance at smaller scales.

Applications and Use Cases Across Industries

Large language models (LLMs) are now used in many fields. They help make businesses run smoother, improve healthcare, and enhance learning. These AI systems are getting better and more useful all the time.

Business Applications

In business, LLMs create smart chatbots for better customer service. They also help make marketing materials and product descriptions. Plus, they analyze data to help businesses make decisions.

Healthcare and Scientific Research

In healthcare, LLMs change how we do research and care for patients. They look through lots of medical papers and help with diagnosis. They also make clinical notes easier to manage.

In science, LLMs help find new ideas and analyze data. They make it easier to understand different subjects together.

The use of LLMs in healthcare is expected to grow by 35% annually, highlighting their increasing importance in diagnostics and patient care.

Education and Learning

LLMs are also changing education. They make learning more personal and help with homework. They can even create educational materials like lesson plans.

They’re good at writing and translating too. This makes them very useful in schools.

As LLMs get better, they will change many industries even more. They will make businesses run smoother, improve healthcare, and change education. These AI systems are really making a big difference.

Challenges and Limitations of LLMs

Large Language Models (LLMs) have made huge strides in AI. Yet they face many challenges and limitations. One big issue is the amount of computing power needed to train and run these models. The shift from older AI systems to neural networks has made pre-trained language models (PLMs) more common, and with them the demand for that computing power.

Another problem is bias in the training data. These models learn from huge datasets, which can include biases. This leads to biased outputs. Also, LLMs can create false but believable information, known as “hallucination.” This can spread wrong information and requires strong checks.

Getting high-quality and diverse training data is hard for LLM developers. Making the model bigger and training it longer helps it learn better. But LLMs need a lot of resources, which raises concerns about their environmental impact and about who can afford to use them.

Despite these issues, LLMs are a big deal in AI research. They do a great job of understanding and making text like humans, better than smaller models. We need to work on making LLMs more understandable and addressing ethical concerns to use them fully.

In summary, LLMs have a lot of promise but need careful handling. We must tackle problems like computing needs, data quality, bias, and hallucination. This way, we can unlock LLMs’ full potential for better AI and learning.

As LLMs become more common, we need to focus on responsible AI development to ensure fairness and accountability.

Ethical Considerations and Data Privacy

Large language models (LLMs) are changing how we process language, but they raise big questions about ethics and privacy. These AI systems are promising for many fields, including healthcare and education. Yet we must think carefully about how to use them responsibly.

Bias and Fairness Issues

LLMs can show biases in their answers. This is because they learn from data that might be unfair. We need to make sure they treat everyone fairly.

Data Protection Concerns

Training LLMs requires a lot of data, which raises privacy concerns. Models can memorize personal information from their training data and reproduce it later, which is a serious risk. We must protect data well and follow laws like the GDPR and the EU AI Act.

Responsible AI Development

Creating AI responsibly is key. We need to make sure AI is open, accountable, and fair. Working together, we can make sure AI is safe and ethical.

As LLMs become more common, we must keep focusing on ethics and privacy. By tackling these big issues, we can use AI’s power wisely and trust it.

The Impact of GPT-4 and Future Developments

GPT-4, a recent version of OpenAI’s language model, has brought a new level of capability and multimodal AI integration. It excels at understanding many languages, learning from only a few examples, and solving complex tasks. This makes GPT-4 a big leap forward in natural language processing (NLP).

As we look ahead, experts say we’ll see even more amazing things from GPT-4 and similar models. These models will get better, more useful, and used in many areas.

One exciting idea is combining GPT-4 with other AI capabilities, such as computer vision. This multimodal mix could lead to major breakthroughs and help GPT-4 solve even harder problems.

Also, training GPT-4 on lots of different data, including sounds and images, could make it even better. This could lead to new uses in self-driving cars, smart assistants, and more.

The future of AI integration is bright, thanks to GPT-4 and other models. They will change many industries, make work easier, and change how we use technology. The possibilities are endless, and the future of NLP is full of exciting changes that will shape our digital world.

The development of Large Language Models like GPT-4 has ushered in a new era of AI integration, pushing the boundaries of what’s possible in natural language processing.

Conclusion

Large Language Models (LLMs) are changing how we process language and many industries. They use deep learning and other advanced methods to get better at understanding language. This makes them key players in AI’s future.

Looking forward, we’ll see even more advanced and responsible LLMs. These technologies will change how we work and talk to computers. They will bring about big changes in fields like education and transportation.

The future of AI and LLMs is exciting but also uncertain. It’s important to use these tools wisely for the good of society. As we explore new possibilities, we must stay true to ethics and fairness.

FAQ

Q: What are Large Language Models (LLMs)?

A: Large Language Models are advanced neural networks that understand and create human language. They learn from huge amounts of text data. This lets them grasp the details of grammar, context, and cultural references.

Q: How do LLMs work?

A: LLMs use deep learning to process and create language that sounds human. They learn from vast amounts of text data. This helps them understand word and sentence relationships.

The transformer architecture, with its self-attention mechanisms, is key to many LLMs.

Q: What are the key components and evolution of LLMs?

A: LLMs have several important parts, like attention mechanisms and self-supervised learning. They’ve grown from simple models to complex ones like transformers. This has greatly improved their language skills.

Q: What is the current state of LLM technology?

A: LLM technology is getting better fast. Models like GPT-4 are doing better at different tasks. New trends include learning from text and images together and training more efficiently.

Q: What are the different types of LLMs and their capabilities?

A: There are many types of LLMs, each with its own strengths. GPT, BERT, XLNet, T5, RoBERTa, ALBERT, and ELECTRA are some examples. They’re good at tasks like text creation, understanding language, and learning from one task to another.

Q: What are the applications of LLMs across industries?

A: LLMs are used in many ways across different fields. In business, they help with chatbots, content creation, and data analysis. In healthcare, they analyze medical texts, help with diagnosis, and automate reports. In education, they offer personalized learning, tutoring, and help create educational content.

Q: What are the challenges and limitations of LLMs?

A: LLMs face several challenges. They need a lot of computing power to train and use. They can also learn biases from their data and sometimes create false information. Making sure the training data is diverse and accurate is a big challenge.

Q: What are the ethical considerations in LLM development and deployment?

A: Ethical issues in LLMs include avoiding biases, ensuring fairness, and protecting user data. There’s a risk of LLMs being used to spread false information or harm. It’s important to develop AI responsibly to avoid these problems.

Q: How will LLMs impact the future of natural language processing?

A: LLMs will keep changing how we handle natural language and many other areas. As technology gets better, we’ll see more advanced, efficient, and ethical LLMs. This will change how we work, communicate, and interact with AI.

Q: What is the difference between GPT-3 and GPT-4?

A: GPT-4 is like an upgraded version of GPT-3. It has more knowledge and can understand and generate text even better. While GPT-3 was already impressive, GPT-4 can handle more complex tasks and give more accurate answers.

Q: How do LLMs learn from text data?

A: LLMs learn by reading tons of text, just like how we learn from books and articles. They look for patterns in the words and sentences, which helps them understand how language works.

Q: Can LLMs understand different languages?

A: Yes! Many LLMs can understand and generate text in multiple languages. This means they can help people communicate across different languages, making it easier for everyone to connect.

Q: What is “hallucination” in LLMs?

A: Hallucination in LLMs happens when they create information that sounds real but isn’t true. It’s like when someone tells a story that isn’t based on facts. This can sometimes lead to confusion, so it’s important to double-check what they say.

Q: How are LLMs used in customer service?

A: In customer service, LLMs power chatbots that can answer questions and help customers 24/7. They make it easier for businesses to provide quick support without needing a person available all the time.

Q: Why is it important to have diverse training data for LLMs?

A: Having diverse training data means that LLMs learn from many different perspectives. This helps them avoid biases and ensures they treat everyone fairly when generating responses.

Q: What role do attention mechanisms play in LLMs?

A: Attention mechanisms help LLMs focus on the most important words in a sentence, kind of like how we pay attention to key details when reading. This makes their understanding of language much better.

Q: How can LLMs help with education?

A: LLMs can make learning more personal by providing tailored help with homework or creating educational materials like quizzes and lesson plans. They can even tutor students in subjects they’re struggling with!

Q: What are some potential future applications of LLMs?

A: In the future, we might see LLMs used in more areas like law, where they could help analyze legal documents, or in creative fields, helping writers brainstorm ideas or draft stories.

Q: How do multimodal models differ from traditional LLMs?

A: Multimodal models can understand and work with different types of information, like text, images, and sounds all at once. Traditional LLMs mainly focus on text alone, so multimodal models are much more versatile!

Q: Why do we need to think about ethics when using LLMs?

A: We need to consider ethics because LLMs can sometimes produce biased or unfair results based on their training data. It’s important to make sure they treat everyone equally and protect people’s privacy.

Q: What is transfer learning in the context of LLMs?

A: Transfer learning is when an LLM learns from one task and then uses that knowledge to do another task better. It’s like learning math concepts first and then using them to solve word problems.

Q: How can we ensure that AI remains fair and accountable?

A: To keep AI fair, we need to regularly check how it works and make sure it’s not being biased or unfair to anyone. This includes using diverse training data and being transparent about how decisions are made.

Q: What are some common misconceptions about LLMs?

A: One common misconception is that LLMs always give correct answers. While they are smart, they can still make mistakes or misunderstand things, so it’s always good to verify their information.

Q: How does fine-tuning work for specific tasks in LLMs?

A: Fine-tuning is when you take a general model that’s already learned a lot and teach it even more about a specific topic or task. This helps it perform better in areas where you want it to excel, like medical advice or customer support.

References and Recommended Reading

- Large Language Models: Understanding the Future of NLP – https://neuroflash.com/blog/large-language-models-understanding-the-future-of-nlp/

- Introduction to Large Language Models (LLMs): Understanding the Future of AI – https://medium.com/@samina.amin/introduction-to-large-language-models-llms-understanding-the-future-of-ai-554ac767d280

- What Are Large Language Models (LLMs)? | IBM – https://www.ibm.com/topics/large-language-models

- What are Large Language Models? | A Comprehensive LLMs Guide – https://www.elastic.co/what-is/large-language-models

- What are Large Language Models (LLMs)? | Definition from TechTarget – https://www.techtarget.com/whatis/definition/large-language-model-LLM

- Large Language Models [LLMs] – https://ashishjaiman.medium.com/large-language-models-llms-260bf4f39007

- Large language model (LLM): How does it work? | GrowthLoop – https://www.growthloop.com/university/article/llm

- Understanding Large Language Models (LLMs): A Comprehensive Guide – https://www.linkedin.com/pulse/understanding-large-language-models-llms-comprehensive-guide-vxfcc

- Understanding Large Language Models (LLMs): Some Practical Considerations and The Future of LLMs—… – https://medium.com/@shrihanbm/understanding-large-language-models-llms-some-practical-considerations-and-the-future-of-llms-9f4a7493fe7e

- Large language models: The basics and their applications – https://www.moveworks.com/us/en/resources/blog/large-language-models-strengths-and-weaknesses

- The future landscape of large language models in medicine – Communications Medicine – https://www.nature.com/articles/s43856-023-00370-1

- LLM Use Cases: One Large Language Model vs Multiple Models | HatchWorks – https://hatchworks.com/blog/gen-ai/llm-use-cases-single-vs-multiple-models/

- Large Language Models Use Cases and Applications – https://vectara.com/blog/large-language-models-use-cases/

- Large language models (LLMs): survey, technical frameworks, and future challenges – Artificial Intelligence Review – https://link.springer.com/article/10.1007/s10462-024-10888-y

- Large Language Models: Capabilities, Advancements, and Limitations [2024] | HatchWorks – https://hatchworks.com/blog/gen-ai/large-language-models-guide/

- Ethical Concerns Regarding the Use of Large Language Models in Healthcare – https://pmc.ncbi.nlm.nih.gov/articles/PMC10679759/

- Deconstructing The Ethics of Large Language Models from Long-standing Issues to New-emerging Dilemmas – https://arxiv.org/html/2406.05392v1

- Exploring the Horizon: The Future of GPT and Large Language Models in NLP – https://medium.com/@mervebdurna/exploring-the-horizon-the-future-of-gpt-and-large-language-models-in-nlp-33a6923c9291

- ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health – https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10166793/

- Large language models could change the future of behavioral healthcare: a proposal for responsible development and evaluation – npj Mental Health Research – https://www.nature.com/articles/s44184-024-00056-z

- NLP vs LLM: A Comprehensive Guide to Understanding Key Differences – https://medium.com/@vaniukov.s/nlp-vs-llm-a-comprehensive-guide-to-understanding-key-differences-0358f6571910

- Large Language Models (LLMs) and Natural Language Processing (NLP): The Future is Here. – https://www.trinityit.biz/article/large-language-models-llms-and-natural-language-processing-nlp-future-here

- Mixture of Experts Explained – https://huggingface.co/blog/moe

- 65+ Statistical Insights into GPT-4: A Deeper Dive into OpenAI’s Latest LLM – https://originality.ai/blog/gpt-4-statistics

- Number of Parameters in GPT-4 (Latest Data) – https://explodingtopics.com/blog/gpt-parameters

- GPT-4 architecture, datasets, costs and more leaked – https://the-decoder.com/gpt-4-architecture-datasets-costs-and-more-leaked/

- Breaking News: GPT-4 Model Architecture Revealed – Boasting 1.8 Trillion Parameters and a Mixed Expert Model! – https://www.inspire2rise.com/gpt-4-model-architecture.html

- OpenAI’s latest model has 1.8 trillion parameters and required 30 billion quadrillion FLOPS to train – https://www.allaboutai.com/ai-news/openai-model-trillion-parameters-billion-quadrillion-flops-train/

- GPT-4 Technical Report – https://arxiv.org/abs/2303.08774

- Artificial Intelligence (AI) Market Size, Share & Industry Analysis, By Component (Hardware, Software/Platform, and Services), By Function (Human Resources, Marketing & Sales, Product/Service Deployment, Service Operation, Risk, Supply-Chain Management, and Others (Strategy and Corporate Finance)), By Deployment (Cloud and On-premises), By Industry (Healthcare, Retail, IT & Telecom, BFSI, Automotive, Advertising & Media, Manufacturing, and Others), and Regional Forecast, 2024-2032 – https://www.fortunebusinessinsights.com/industry-reports/artificial-intelligence-market-100114

- Training a single AI model can emit as much carbon as five cars in their lifetimes – https://www.technologyreview.com/2019/06/06/239031/training-a-single-ai-model-can-emit-as-much-carbon-as-five-cars-in-their-lifetimes/

- Attention Is All You Need – https://arxiv.org/abs/1706.03762

- The Evolution of Language Models: From N-Grams to LLMs, and Beyond – https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4625356